Example gallery

This gallery collects examples of how to use the GemClus library, from clustering to variable selection.

General examples

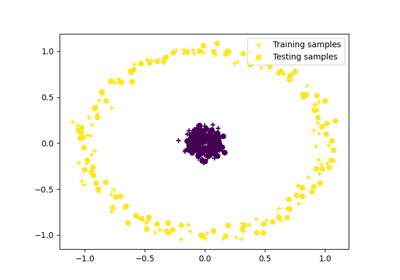

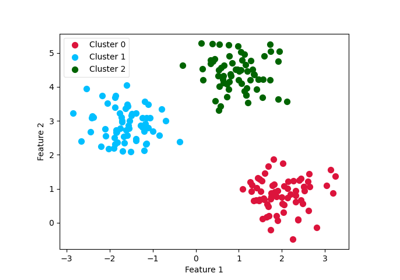

An introductory example of clustering with an MLP and the MMD GEMINI

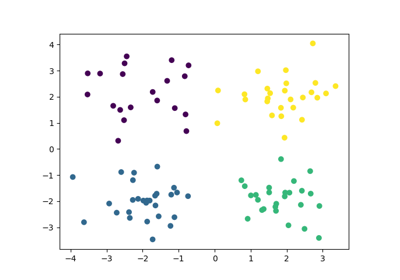

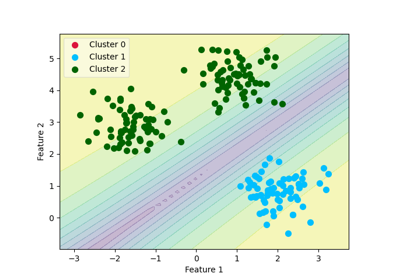

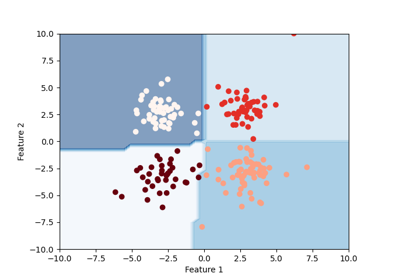

Example of a decision boundary map for a mixture of Gaussian and low-degree Student distributions

Clustering with the squared-loss mutual information

Drawing a decision boundary between two interlacing moons

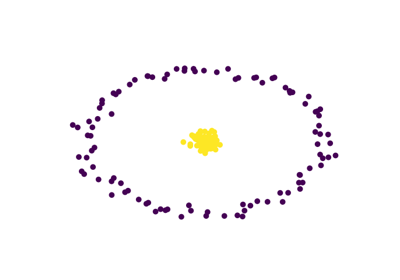

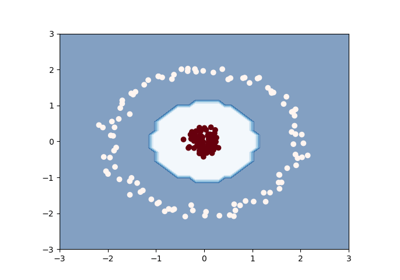

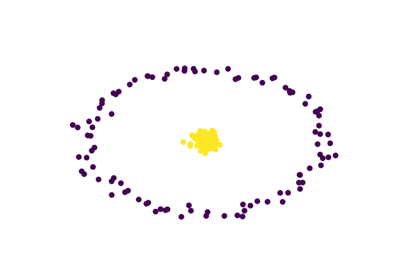

Comparative clustering of the circles dataset with different kernels

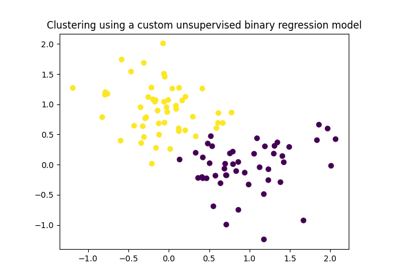

Extending GemClus to build your own discriminative clustering model

Feature selection

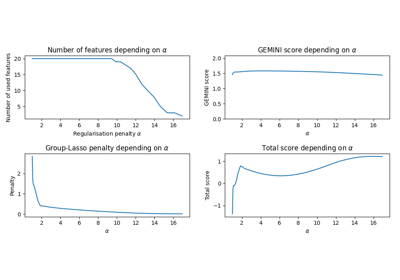

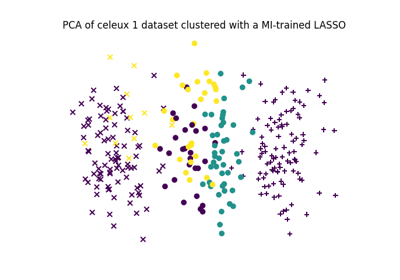

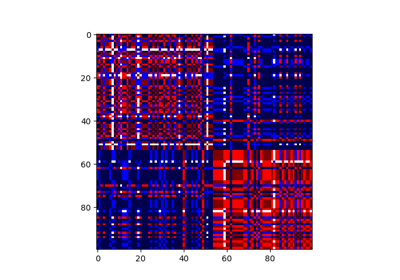

Feature selection using the Sparse MMD OvO (Logistic regression)

Feature selection using the Sparse Linear MI (Logistic regression)

Consensus clustering

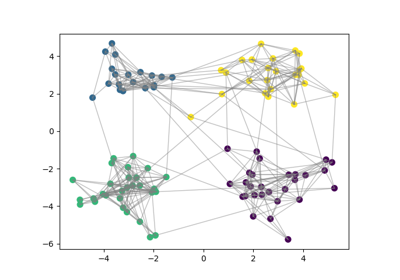

Consensus clustering with linking constraints on sample pairs

Scoring with GEMINI

Trees

Building a differentiable unsupervised tree: DOUGLAS

Building an unsupervised tree with a kernel k-means objective: KAURI